Question No. 01 (20)

Select an area of computer systems (for example, processor design, networks, operating systems, or databases), review articles on performance evaluation in that area and make a list of benchmarks used in those articles.

Question No. 02 (30)

Make a complete list of metrics to compare

Two personal computers

Two database systems

Two disk drives

Two window systems

Best of luck

CSS ASSIGNMENT NUMBER: 2

STUDENT ROLL NUMBER: Sp-2014-MSC-CE 011

STUDENT NAME: Kashif Islam

Area Selected: Android Systems Performance Evaluation

Articles Reviewed:

Catch Me if You Can: Evaluating Android Anti-malware against Transformation Attacks

Evaluating Performance of Android Platform Using Native C for Embedded Systems

Sleeping Android: Exploit through Dormant Permission Requests

Measuring and Improving Application Launching Performance on Android Devices

Analysis of Android Malware Detection Performance using Machine Learning Classifiers

SUT: Android

CUS: DVM

Analysis Method: Measurement and simulation

Profiling is not available in Android, so the default “Debug” and “VmDebug” libraries are used to measure performance.

Benchmark used:

Finding a benchmark was a challenge here.

The benchmark should itself run on the DVM.

The benchmark chosen should have its source code available.

So seven common and popular applications from the Android SDK and three default Android applications (Camera, Music Player and Calculator) were chosen as the benchmark for comparing the performance of the DVM.

This is the most interesting paper on evaluating anti-malware software performance on Android.

SUT: Android anti-malware software

CUS: Transformed Anti Malwares

Analysis Method: Measurement and simulation

Benchmark used:

Malware detection against the following transformation metrics:

SUT: NDK

CUS: JNI vs native JAVA

Analysis Method: Measurement only

Benchmark used: JNI was used as the benchmark in this study, and native Java performance was measured against it.

Performance metrics:

Results show that native C/C++ used through JNI performs better on all metrics except memory access algorithms.

For memory access, native Java performs better than JNI on Android.

SUT: Android Applications

CUS: Launching Speed and Performance

Analysis Method: Measurement and Simulation

Performance Metric: Launch time

Benchmark used: Default preload and no preload of classes were used as the benchmark against +51 classes, +120 classes and +281 classes.

Results:

SUT: Android malware and its detection using MLCs

CUS: Machine Learning Classifiers performance

Analysis Method: Measurement and Simulation

Performance Metric: True Positive Rate, False Positive Rate, Precision

Workload: Malware samples GoldDream, PJApps, DroidKungFu2, Snake, Angry Birds Rio Unlocker

Benchmark selected: Naïve Bayesian, RandomForest, Logistic Regression, Support Vector Machine (SVM)

Result: Shown in terms of a confusion matrix.

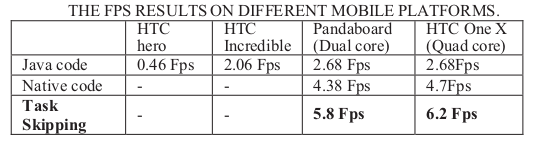
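
As a rough illustration of how the three metrics listed above follow from a confusion matrix, here is a minimal Python sketch. The class name `ConfusionMatrix` and the counts in the example are hypothetical and are not taken from the reviewed paper; "positive" is assumed to mean "classified as malware".

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ConfusionMatrix:
    """Counts for a binary malware / benign classifier (hypothetical helper)."""
    tp: int  # malware correctly flagged as malware
    fp: int  # benign apps wrongly flagged as malware
    tn: int  # benign apps correctly passed
    fn: int  # malware wrongly passed as benign

    def true_positive_rate(self) -> float:
        # Share of actual malware that was detected.
        actual_positive = self.tp + self.fn
        return self.tp / actual_positive if actual_positive else 0.0

    def false_positive_rate(self) -> float:
        # Share of benign apps that were wrongly flagged.
        actual_negative = self.fp + self.tn
        return self.fp / actual_negative if actual_negative else 0.0

    def precision(self) -> float:
        # Share of flagged apps that really were malware.
        flagged = self.tp + self.fp
        return self.tp / flagged if flagged else 0.0


# Made-up counts, for illustration only.
cm = ConfusionMatrix(tp=90, fp=5, tn=95, fn=10)
print(cm.true_positive_rate(), cm.false_positive_rate(), cm.precision())
```

Computing all three from the same four counts makes it easy to compare classifiers on one workload, since each classifier only needs to report its confusion matrix.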

SUT: Traffic Safety Guardian System on Android

CUS: TSG frames

Metrics: Car detection speed and fps

Workload: moving cars and highway lines

Benchmark: original fps without Task Skipping

Result: increased fps by using task skipping and JNI

Conclusion

Performance comparison on Android devices still lacks an analytical method. Most performance analysis is conducted using measurement or simulation, which is time-consuming. The benchmarks used for performance analysis are native implementations without the suggested improvements; their results are then compared with those of the schemes or apps that implement the improvements.
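
The baseline-versus-improved comparison described in the conclusion can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the function names are hypothetical, the median is one reasonable choice of summary statistic (the reviewed papers may use another), and the timings are made-up values, not measurements from any of the articles.

```python
from statistics import median


def speedup(baseline_ms: list[float], improved_ms: list[float]) -> float:
    """Ratio of median baseline time to median improved time (>1 means faster)."""
    return median(baseline_ms) / median(improved_ms)


def percent_reduction(baseline_ms: list[float], improved_ms: list[float]) -> float:
    """Percentage by which the improved scheme reduces the median time."""
    base = median(baseline_ms)
    return 100.0 * (base - median(improved_ms)) / base


# Hypothetical repeated measurements in milliseconds (illustration only).
baseline_runs = [1000.0, 1040.0, 980.0]   # unmodified implementation
improved_runs = [800.0, 820.0, 790.0]     # implementation with the improvement

print(speedup(baseline_runs, improved_runs))
print(percent_reduction(baseline_runs, improved_runs))
```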

About the Author.

8 years’ experience in Nokia Siemens Networks and Ericsson, Intelligent Networks and Charging Systems

Humanist, philanthropist and Technologist

References

Performance Analysis of Android Underlying Virtual Machine in Mobile Phones ieeexplore.ieee.org/xpl/articleDetails.jsp?arnumber=6336470

Catch Me if You Can: Evaluating Android Anti-malware against Transformation Attacks

ieeexplore.ieee.org/xpl/articleDetails.jsp?arnumber=6661334

Evaluating Performance of Android Platform Using Native C for Embedded Systems

ieeexplore.ieee.org/xpls/abs_all.jsp?arnumber=5669738

Sleeping Android: Exploit through Dormant Permission Requests

www.ma.rhul.ac.uk/static/techrep/2013/MA-2013-06.pdf

Measuring and Improving Application Launching Performance on Android Devices

ieeexplore.ieee.org/xpls/abs_all.jsp?arnumber=6726978

Analysis of Android Malware Detection Performance using Machine Learning Classifiers

ieeexplore.ieee.org/xpls/abs_all.jsp?arnumber=6675404

Enhancing Performance of Traffic Safety Guardian System on Android by Task Skipping Mechanism

ieeexplore.ieee.org/xpls/abs_all.jsp?arnumber=6570137

Question No. 02 (30)

Make a complete list of metrics to compare

Two personal computers

Two database systems

Two disk drives

Two window systems

Performance metrics to compare Two personal computers

Throughput: Processing speed, Memory speed, bus speed.

Response time: boot time, disk RPM / I/O speed, battery discharge curve

Resource: Disk and memory capacity, number of ports, display technology used (LCD or LED)

In addition to the above, the following are performance metrics of individual components for comparing two PCs:

CPU performance metrics: clock speed, L1/L2/L3 cache size

Graphics card performance metrics: Graphics Processing Clusters, Streaming Multiprocessors, CUDA Cores, Texture Units, ROP Units, Base Clock, Boost Clock, Memory Clock (Data rate), L2 Cache Size, Total Video Memory, Memory Interface, Total Memory Bandwidth, Texture Filtering Rate (Bilinear), Fabrication Process used, Transistor Count, Connectors used, Form Factor, Power Connectors quality, Thermal Design Power (TDP), Thermal Threshold

Performance metrics to compare Two database systems

Throughput: TPS, w/s, r/

Response time: indexing time, query time, loading time, database lock time (measuring concurrent operations and lock percentage), crash recovery time, connection time, deadlock discovery and resolution time

Resource: resources consumed in journaling, processing overhead, I/O on underlying disks (supported block size), load on the DB server, load on the network

In addition to the above, the following performance metrics can be used depending upon the SUT:

Schema comparison

Timeliness and freshness of data metrics under multiple load conditions.
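
To make a few of the database metrics above concrete, here is a minimal Python sketch that derives throughput (TPS), a query-time percentile and a lock percentage from raw timings. The function names, the nearest-rank percentile method and all sample values are assumptions for illustration; they do not come from any particular database system or benchmark.

```python
import math
from statistics import mean


def throughput_tps(completed_transactions: int, elapsed_s: float) -> float:
    """Transactions completed per second of wall-clock time."""
    return completed_transactions / elapsed_s


def percentile(samples: list[float], p: float) -> float:
    """Nearest-rank percentile of the samples, for 0 < p <= 100."""
    ordered = sorted(samples)
    rank = max(1, math.ceil(p * len(ordered) / 100))
    return ordered[rank - 1]


def lock_percentage(lock_wait_s: float, elapsed_s: float) -> float:
    """Share of elapsed time spent waiting on locks, in percent."""
    return 100.0 * lock_wait_s / elapsed_s


# Hypothetical query times in milliseconds for one 2-second run.
query_times_ms = [12, 15, 11, 40, 13, 14, 12, 90, 13, 12]

print(throughput_tps(len(query_times_ms), 2.0))  # transactions per second
print(mean(query_times_ms))                      # mean query time
print(percentile(query_times_ms, 95))            # tail query time
print(lock_percentage(0.3, 2.0))                 # percent of time in lock waits
```

Reporting a high percentile next to the mean is useful when comparing two systems, because a few slow queries can hide behind a similar average.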

I plan to compare Berkeley DB, MongoDB and Oracle in the future, provided the research yields financial benefits.

Performance metrics to compare Two disk drives

Throughput: Short stroking

Response time: Seek time, Data transfer rate, Media rate, Sector overhead time, Head switch time, Cylinder switch time

Resource: power consumption, magnetic material lifetime, size, weight, shock absorbent quotient value, audible noise, shock resistance
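
Several of the disk response-time metrics above can be combined into a rough per-request estimate. The Python sketch below uses the common approximation that access time is seek time plus average rotational latency (half a revolution) plus transfer time. The rotational-latency term derived from RPM is an assumption added here, the function names are hypothetical, and the drive figures are illustrative rather than taken from a real data sheet.

```python
def rotational_latency_ms(rpm: float) -> float:
    """Average rotational latency: half a revolution, in milliseconds."""
    return 0.5 * 60_000.0 / rpm


def transfer_time_ms(request_bytes: int, rate_mb_per_s: float) -> float:
    """Time to move the request at the given data transfer rate (1 MB = 10^6 bytes)."""
    return request_bytes / (rate_mb_per_s * 1_000_000) * 1000.0


def access_time_ms(seek_ms: float, rpm: float,
                   request_bytes: int, rate_mb_per_s: float) -> float:
    """Rough estimate: seek + average rotational latency + transfer."""
    return (seek_ms
            + rotational_latency_ms(rpm)
            + transfer_time_ms(request_bytes, rate_mb_per_s))


# Illustrative drive: 9 ms seek, 7200 RPM, 100 MB/s, one 4 KiB request.
print(access_time_ms(seek_ms=9.0, rpm=7200, request_bytes=4096, rate_mb_per_s=100.0))
```

For small random requests the seek and rotational terms dominate, which is why two drives with similar transfer rates can still differ noticeably in response time.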

Performance metrics to compare Two window systems

Startup time

Backwards compatibility, software compatibility

File copy operations (the newer Windows system is more optimized)

CPU usage, cores

Memory management, display, graphics

Security, Data Execution Prevention, netbook support

Availability of official support, BranchCache support (a newer feature to speed up the network, absent in older versions)

Minimum resource constraints (e.g. older Windows versions could run on slower processors and do not require much RAM)

Kernel type (hybrid or native)

Platform support (supports both 32 and 64 architectures or only one of them)

Physical Memory Limits
